Tuesday, November 25, 2014

Flat Volatility Surfaces & Discrete Dividends

In papers around volatility and cash (discrete) dividends, we often encounter the example of the flat volatility surface. For example, the OpenGamma paper presents this graph:

It shows that if the Black volatility surface is fully flat, the pure volatility surface (corresponding to a process that includes discrete dividends in a consistent manner) jumps at the dividend dates. Equivalently, if the pure volatility surface is flat, the Black volatility jumps.

This can be traced to the fact that the Black formula, applied with the same volatility on both sides of the dividend date, does not respect C(S,K,Td-) = C(S,K-d,Td) as the forward drops from F(Td-) to F(Td-)-d, where d is the dividend amount at Td, the ex-dividend date.

Unfortunately, those examples are not very helpful. In practice, the market observables are just Black volatility points, not a full volatility surface. These points can be interpolated into a volatility slice for each expiry without regard to dividends. Discrete dividends will mostly fall between two slices, so the Black volatility jump will happen on time-interpolated data.

While the jump size is known (it must obey call price continuity), the question of how one should interpolate that data up to the jump is far from trivial, even with two flat Black volatility slices.
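To make the jump size concrete, here is a minimal Python sketch of the continuity condition at a single dividend date. It assumes zero rates (undiscounted prices), an at-the-money strike, and illustrative numbers (forward 100, dividend 5, volatility 20%) that are not taken from any of the papers. The helper names `black_call` and `implied_vol` are hypothetical.

```python
from math import erf, log, sqrt

def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def black_call(forward, strike, vol, expiry):
    """Undiscounted Black call price."""
    std = vol * sqrt(expiry)
    d1 = log(forward / strike) / std + 0.5 * std
    return forward * norm_cdf(d1) - strike * norm_cdf(d1 - std)

def implied_vol(price, forward, strike, expiry, lo=1e-6, hi=5.0):
    """Black implied volatility by bisection (the call price increases with vol)."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if black_call(forward, strike, mid, expiry) < price:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Illustrative inputs (assumptions, not market data).
forward_before = 100.0   # F(Td-)
dividend = 5.0           # d
strike = 100.0           # K
t_div = 1.0              # Td
vol_before = 0.20        # Black volatility just before the dividend

# Continuity: C(F(Td-), K, Td-) = C(F(Td-) - d, K - d, Td).
price = black_call(forward_before, strike, vol_before, t_div)
vol_after = implied_vol(price, forward_before - dividend, strike - dividend, t_div)

# Using the same volatility after the dividend would break continuity.
price_same_vol = black_call(forward_before - dividend, strike - dividend, vol_before, t_div)

print("vol before:", vol_before, "vol after:", vol_after)
print("price before:", price, "price after with unchanged vol:", price_same_vol)
```

The Black volatility for strike K-d just after the dividend comes out higher than the volatility for strike K just before it, roughly in the ratio F/(F-d) at the money. The sketch only gives the jump itself; it says nothing about how to interpolate up to it.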

The most logical approach is to consider a model that includes discrete dividends consistently. For example, one can back out the Black volatility corresponding to the price of an option under a piecewise lognormal process with jumps at the dividend dates. That price can be computed by applying a finite difference method to the PDE. Alternatively, Bos & Vandermark propose a simple spot- and strike-adjusted Black formula that obeys the continuity requirement (the Lehman model) and which, in practice, stays quite close to the piecewise lognormal model price. Another possibility is to rely on a forward modelling of the dividends, as in Buehler (if one is comfortable with the idea that the option price will then ultimately depend on dividends past the option expiry).

Recently, a Wilmott article suggested relying only on the jump adjustment, but did not really mention how to find the volatility just before or just after the dividend. Here is an illustration of how those assumptions can change the volatility in between slices, using two dividends at T=0.9 and T=1.1.

In the first graph, we simply interpolate the pure volatility from the Bos & Vandermark formula linearly in forward moneyness, as it should be continuous with the forward (the PDE would give nearly the same result), and then compute the equivalent Black volatility (and thus the jump at the dividend dates).

In the second graph, we interpolate linearly between the two Black slices until we reach a dividend, at which point we impose the jump condition, and we repeat the process until the next slice. We process forward (while the Wilmott article processes backward), as it seemed a bit more natural to make the interpolation not depend on future dividends. Processing backward would just make the last part flat and the first part downward sloping. In this example, backward processing would be closer to the Bos & Vandermark Black volatility, but when the dividends are near the first slice, the opposite becomes true.

While the scale of those changes is not that large in the example considered, the choice can make quite a difference in the price of structures that depend on the volatility in between slices. A recent example I encountered is the variance swap when one includes an adjustment for discrete dividends (the prices just after the dividend date are then used).

To conclude, if one wants to use the classic Black formula everywhere, the volatility must jump at the dividend dates. Interpolation in time is then not straightforward, and one will need to rely on a consistent model to interpolate. It is then not exactly clear why anyone would stay with the Black formula, except for familiarity.

Tuesday, November 18, 2014

Machine Learning & Quantitative Finance

There is an interesting course on Machine Learning on Coursera. It does not require much prior knowledge and yet manages to teach quite a lot.

I was struck by the fact that most techniques and ideas also apply to problems in quantitative finance.

- Linear regression: used for example in the Longstaff-Schwartz approach to price Bermudan options with Monte-Carlo. Interestingly, the teacher insists on feature normalization, something we can easily forget, especially with polynomial features.

- Gradient descent: one of the most basic minimizers, and we use minimizers all the time for model calibration.

- Regularization: in finance, this is sometimes used to smooth out the volatility surface, and it can add stability to a calibration. The lessons are very practical and explain well how to find the right value of the regularization parameter.

- Neural networks: calibrating a model is very much like training a neural network. Backpropagation is the same thing as adjoint differentiation. It is very interesting to see that it is a key feature for neural networks: without it, training would be much too slow and neural networks would not be practical. Once the network is trained, it is evaluated relatively quickly in the forward direction. This is basically the same thing as calibration followed by pricing.

- Support vector machines: a Gaussian kernel is often used to represent the frontier. We find the same idea in the particle Monte-Carlo method.

- Principal component analysis: can be applied to the covariance matrix square root in Monte-Carlo simulations, or to "compress" large baskets, as well as for portfolio risk.
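The first two bullets can be illustrated together. The following Python sketch fits a straight line by plain batch gradient descent, once on a raw spot-like feature and once on the normalized feature. The data (an exact line over spots between 80 and 120) and the learning rates are made up for illustration, and `gradient_descent` is a hypothetical helper, not code from the course.

```python
from math import sqrt

def gradient_descent(xs, ys, learning_rate, iterations):
    """Fit y = w0 + w1 * x by batch gradient descent on half the mean squared error."""
    w0, w1 = 0.0, 0.0
    m = len(xs)
    for _ in range(iterations):
        errors = [w0 + w1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        w0 -= learning_rate * grad0
        w1 -= learning_rate * grad1
    return w0, w1

# Hypothetical data: spot-like values between 80 and 120 on the exact line y = 2 + 0.5 x.
spots = [80.0 + i for i in range(41)]
values = [2.0 + 0.5 * s for s in spots]

# Raw feature: the step must stay tiny to remain stable, so the intercept barely moves.
a_raw, b_raw = gradient_descent(spots, values, learning_rate=1e-4, iterations=100)

# Normalized feature: zero mean and unit variance, so a large step is stable.
mean = sum(spots) / len(spots)
std = sqrt(sum((s - mean) ** 2 for s in spots) / len(spots))
scaled = [(s - mean) / std for s in spots]
w0, w1 = gradient_descent(scaled, values, learning_rate=0.5, iterations=100)

# Map the coefficients back to the original scale.
b_norm = w1 / std
a_norm = w0 - b_norm * mean

print("raw feature:       ", a_raw, b_raw)
print("normalized feature:", a_norm, b_norm)
```

With the same number of iterations, the normalized fit recovers the intercept and slope, while the raw fit is still far from the true intercept. The effect is stronger with polynomial features, whose scales differ by orders of magnitude.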

While it sounds like a straightforward remark, I have found that people (including myself) tend to make the same mistakes in finance. We might use some quadrature, find out it does not perform that well in some cases, and replace it with another one that behaves a bit better, without investigating the real issue: why does the first quadrature break? Is the new quadrature really fixing the issue?

Wednesday, November 12, 2014

Pseudo-Random vs Quasi-Random Numbers

Quasi-Random numbers (like Sobol) are a relatively popular way in finance to improve the Monte-Carlo convergence compared to more classic Pseudo-Random numbers (like Mersenne-Twister). Behind the scenes, one has to be a bit more careful about the dimension of the problem, as the Quasi-Random numbers depend on the dimension (defined by how many random variables are independent from each other).
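To illustrate how a quasi-random generator depends on the dimension, here is a small Python sketch. It uses a Halton sequence rather than Sobol, simply because Halton needs no table of direction numbers. Each coordinate is built from its own prime base, so the number of dimensions must be fixed up front. The function names and the test integrand are illustrative assumptions.

```python
def radical_inverse(index, base):
    """Van der Corput radical inverse of a positive integer index in the given base."""
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result

# One prime base per dimension; more dimensions need more primes.
PRIMES = [2, 3, 5, 7, 11, 13]

def halton_point(index, dimension):
    """Point number `index` (starting at 1) of the Halton sequence in `dimension` dimensions."""
    return [radical_inverse(index, PRIMES[d]) for d in range(dimension)]

# Two-dimensional test integral: E[U1 * U2] = 0.25 for independent uniforms.
n_points = 1024
points = (halton_point(i, 2) for i in range(1, n_points + 1))
estimate = sum(u[0] * u[1] for u in points) / n_points

print("estimate:", estimate, "exact:", 0.25)
```

In a path simulation, every Brownian increment of every factor at every time step consumes one coordinate, which is why the dimension grows so quickly with the number of time steps.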

For a long time, Sobol was limited to 40 dimensions using the so-called Bratley-Fox direction numbers (the paper actually gives the numbers for 50 dimensions). Later, Lemieux gave direction numbers for up to 360 dimensions. Then P. Jäckel proposed an extension with a random initialization of the direction vectors in his book from 2006. Finally, Joe & Kuo published direction numbers for up to 21201 dimensions.

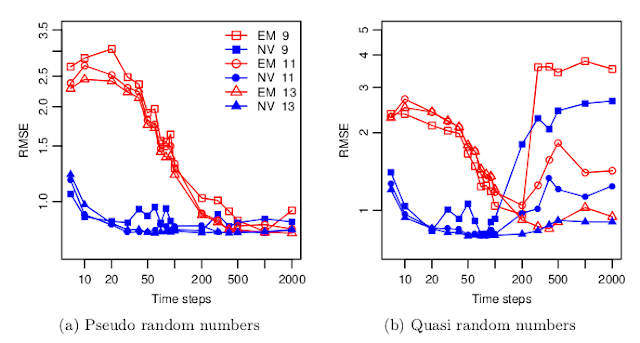

But there are very few studies about how good real-world simulations are with so many quasi-random dimensions. A recent paper, "Fast Ninomiya-Victoir Calibration of the Double-Mean-Reverting Model" by Bayer, Gatheral & Karlsmark, tests this for once, and the results are not so pretty:

With their model, the convergence with Sobol numbers becomes worse when the number of time steps increases, that is, when the number of dimensions increases. There even seems to be a threshold around 100 time steps (=300 dimensions for Euler) beyond which a much higher number of paths (2^13) is necessary to restore proper convergence. And they use the latest and greatest Joe-Kuo direction numbers.

Still, the total number of paths is not that high compared to what I usually use (2^13 = 8192). This is an interesting aspect of their paper: calibration with a low number of paths.

Wednesday, November 05, 2014

Integrating an oscillatory function

Recently, some instabilities were noticed in the Carr-Lee seasoned volatility swap price in some situations.

The Carr-Lee seasoned volatility swap price involves the computation of a double integral. The inner integral is really the problematic one, as the integrand can be highly oscillatory.

I first found a somewhat stable behavior using a specific adaptive Gauss-Lobatto implementation (the one from Espelid) and a change of variable. But it was not very satisfying to see that the outer integral was stable only with another specific adaptive Gauss-Lobatto (the one from Gander & Gautschi, present in QuantLib). I tried various adaptive schemes (coteda, modsim, adaptsim,...) and brute-force trapezoidal integration, but they were either orders of magnitude slower or unstable in some cases. Just using the same Gauss-Lobatto implementation for both would fail...

I then noticed you could write the integral as a Fourier transform as well, allowing the use of FFT. Unfortunately, while this worked, it turned out to require a very large number of points for a reasonable accuracy. This, plus the tricky part of defining the proper step size, makes the method not so practical.

I had heard before of the Filon quadrature, which I thought was more of a curiosity. The main idea is to integrate exactly x^n * cos(k*x). One then relies on a piecewise parabolic approximation of the function f to integrate f(x) * cos(k*x). Interestingly, a very similar idea has been used in the Sali quadrature method for option pricing, except one integrates exactly x^n * exp(-k*x^2).

The Filon quadrature turned out to be remarkable on that problem, combined with a simple adaptive Simpson-like method to find the right discretization. Then, as if by magic, any outer integration quadrature worked.
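For readers who have not seen it, here is a minimal Python sketch of the classic Filon rule for the integral of f(x) * cos(k*x) on a fixed grid, using the standard alpha, beta, gamma coefficients. It is not the adaptive variant used for the volatility swap, and the function names and the test integrand are illustrative assumptions. Because f is approximated by parabolas, the rule is exact for f(x) = x^2 no matter how fast the cosine oscillates.

```python
from math import cos, pi, sin

def filon_weights(theta):
    """Filon coefficients alpha, beta, gamma for theta = k * h."""
    if abs(theta) < 1.0 / 6.0:
        # Truncated Taylor series, to avoid cancellation for small theta.
        t2 = theta * theta
        alpha = theta * t2 * (2.0 / 45.0 - 2.0 * t2 / 315.0)
        beta = 2.0 / 3.0 + 2.0 * t2 / 15.0 - 4.0 * t2 * t2 / 105.0
        gamma = 4.0 / 3.0 - 2.0 * t2 / 15.0 + t2 * t2 / 210.0
        return alpha, beta, gamma
    s, c = sin(theta), cos(theta)
    t3 = theta ** 3
    alpha = (theta * theta + theta * s * c - 2.0 * s * s) / t3
    beta = 2.0 * (theta * (1.0 + c * c) - 2.0 * s * c) / t3
    gamma = 4.0 * (s - theta * c) / t3
    return alpha, beta, gamma

def filon_cos(f, a, b, k, n_panels):
    """Integral of f(x) * cos(k x) over [a, b] using n_panels parabolic panels."""
    h = (b - a) / (2 * n_panels)
    alpha, beta, gamma = filon_weights(k * h)
    xs = [a + i * h for i in range(2 * n_panels + 1)]
    # Even-indexed nodes, with the two end points counted with weight one half.
    even = sum(f(x) * cos(k * x) for x in xs[0::2])
    even -= 0.5 * (f(a) * cos(k * a) + f(b) * cos(k * b))
    # Odd-indexed nodes (panel mid points).
    odd = sum(f(x) * cos(k * x) for x in xs[1::2])
    boundary = f(b) * sin(k * b) - f(a) * sin(k * a)
    return h * (alpha * boundary + beta * even + gamma * odd)

# Test case with a known answer: the integral of x^2 cos(k x) over [0, 2 pi]
# equals 4 pi / k^2 when k is an integer.
k = 50.0
approx = filon_cos(lambda x: x * x, 0.0, 2.0 * pi, k, 4)
exact = 4.0 * pi / k ** 2

print("Filon:", approx, "exact:", exact)
```

Only four panels are used here, far fewer than the number of oscillations of cos(50 x) on the interval; a Simpson rule on the same grid would need to resolve each oscillation.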

Monday, November 03, 2014

The elusive reference: the Lamperti transform

Without knowing that it was a well-known general concept, I first noticed the use of the Lamperti transform in the Andersen-Piterbarg "Interest rate modeling" book, p292, "finite difference solutions for general phi".

Pat Hagan used that transformation for a better discretization of the arbitrage free SABR PDE model.

I then started to notice the use of this transformation in many more papers. The first one I saw naming it "Lamperti transform" was the paper from Aït-Sahalia, "Maximum likelihood estimation of discretely sampled diffusions: a closed-form approximation approach". Recently, those closed-form formulae have been applied to the quadrature method (where one integrates the transition density by a quadrature rule) in "Advancing the universality of quadrature methods to any underlying process for option pricing". There is also a recent, interesting application to Monte-Carlo simulation in "Unbiased Estimation with Square Root Convergence for SDE Models".

So the range of practical applications is quite large. But there was still no reference. A Google search pointed me to a well-written paper that describes the application of the Lamperti transform to various stochastic differential equations and shows its limits: "From State Dependent Diffusion to Constant Diffusion in Stochastic Differential Equations by the Lamperti Transform".

Gary then blogged about the Lamperti transform and various papers from Lamperti, but did not say which one is the source.

After going through some of them, I noticed that Lamperti's 1964 "A simple construction of certain diffusion processes" seemed to be the closest, even though it seems to go beyond stochastic differential equations.

Today, I found a paper referencing this paper explicitly when presenting the transformation of a stochastic process to a unit diffusion: "Density estimates for solutions to one dimensional Backward SDE's". In addition, it also references one exercise of the Karatzas-Shreve book "Brownian motion and Stochastic calculus", which presents the same idea again, without calling it the Lamperti transform.
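For reference, the transformation to a unit diffusion discussed in this post can be written as follows for a one-dimensional diffusion with strictly positive, differentiable volatility function. The notation (mu, sigma, psi, x_0) is mine and not taken from any of the papers above; the drift of the transformed process follows from Itô's lemma.

```latex
% Original process
dX_t = \mu(X_t)\,dt + \sigma(X_t)\,dW_t, \qquad \sigma > 0.

% Lamperti transform
Y_t = \psi(X_t), \qquad \psi(x) = \int_{x_0}^{x} \frac{du}{\sigma(u)}.

% Ito's lemma with \psi' = 1/\sigma and \psi'' = -\sigma'/\sigma^2 gives a unit diffusion
dY_t = \left( \frac{\mu(X_t)}{\sigma(X_t)} - \frac{1}{2}\,\sigma'(X_t) \right) dt + dW_t,
\qquad X_t = \psi^{-1}(Y_t).
```

For example, with a lognormal volatility function, sigma(x) = nu * x, the transform is simply psi(x) = ln(x / x_0) / nu.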
