Quasi-random numbers (like Sobol) are a relatively popular way in finance to improve Monte-Carlo convergence compared to the more classic pseudo-random numbers (like Mersenne-Twister). Behind the scenes, one has to be a bit more careful about the dimension of the problem, as a quasi-random sequence depends on it. The dimension is the number of independent random variables needed to build one sample, for example one path.
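To make the convergence claim concrete, here is a minimal one-dimensional sketch. It is not a full Sobol generator: it uses the base-2 van der Corput sequence, which is what the first coordinate of an unscrambled Sobol sequence reduces to, and compares it with Python's `random` module (a Mersenne-Twister) on a toy integral. The integrand, the seed and the helper names are my own choices for illustration.

```python
import random


def van_der_corput(k: int) -> float:
    """Base-2 radical inverse of k: mirror the bits of k around the binary point."""
    x, scale = 0.0, 0.5
    while k > 0:
        if k & 1:
            x += scale
        k >>= 1
        scale *= 0.5
    return x


def mean_of(f, points):
    """Plain Monte-Carlo estimator: average of f over the given points."""
    points = list(points)
    return sum(f(u) for u in points) / len(points)


def f(u: float) -> float:
    # Toy integrand (an assumption for this sketch): integral over [0, 1] is 1/3.
    return u * u


exact = 1.0 / 3.0
n = 2 ** 10  # a power of two, as is customary with Sobol-type sequences

# Quasi-random estimate: deterministic, evenly spread points.
qmc_estimate = mean_of(f, (van_der_corput(k) for k in range(n)))

# Pseudo-random estimate: Python's random module is a Mersenne-Twister.
rng = random.Random(42)  # arbitrary seed
mc_estimate = mean_of(f, (rng.random() for _ in range(n)))

qmc_error = abs(qmc_estimate - exact)
mc_error = abs(mc_estimate - exact)
print(f"quasi-random error:  {qmc_error:.2e}")
print(f"pseudo-random error: {mc_error:.2e}")
```

In one dimension the quasi-random points simply fill the unit interval evenly, so this says nothing yet about what happens with hundreds of dimensions.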

For a long time, Sobol was limited to 40 dimensions using the so-called Bratley-Fox direction numbers (their paper actually gives the numbers for 50 dimensions). Later, Lemieux gave direction numbers for up to 360 dimensions. Then P. Jäckel proposed an extension with a random initialization of the direction vectors in his book from 2006. Finally, Joe & Kuo published direction numbers for up to 21200 dimensions.

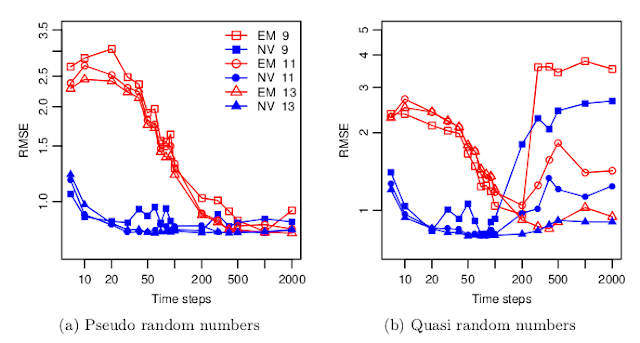

But there are very few studies of how well real-world simulations behave with so many quasi-random dimensions. A recent paper, "Fast Ninomiya-Victoir Calibration of the Double-Mean-Reverting Model" by Bayer, Gatheral & Karlsmark, actually tests this, and the results are not so pretty:

With their model, the convergence with Sobol numbers becomes worse when the number of time steps increases, that is, when the number of dimensions increases. There even seems to be a threshold around 100 time steps (= 300 dimensions for Euler) beyond which a much higher number of paths (2^13) is necessary to restore proper convergence. And they use the latest and greatest Joe-Kuo direction numbers.
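The dimension arithmetic is easy to get wrong, so here is a small sketch of it. It assumes three random draws per Euler time step, which is what "100 time steps = 300 dimensions" implies, and it uses the dimension limits quoted earlier in this post. The function and table names are hypothetical, made up for illustration.

```python
# Maximum dimensions of the direction-number sets, as quoted in this post.
DIRECTION_NUMBER_LIMITS = {
    "Bratley-Fox": 40,
    "Lemieux": 360,
    "Joe-Kuo": 21200,
}


def sobol_dimension(n_steps: int, n_draws_per_step: int) -> int:
    """Each path consumes one Sobol coordinate per random draw per time step."""
    return n_steps * n_draws_per_step


def tables_covering(dimension: int) -> list[str]:
    """Names of the direction-number sets that are large enough for this dimension."""
    return [name for name, limit in DIRECTION_NUMBER_LIMITS.items() if dimension <= limit]


# Assumption: 3 draws per Euler step, inferred from the figures quoted above.
dimension = sobol_dimension(n_steps=100, n_draws_per_step=3)
n_paths = 2 ** 13

print(dimension)                   # 300
print(tables_covering(dimension))  # ['Lemieux', 'Joe-Kuo']
print(n_paths)                     # 8192
```

So the threshold they observe sits well inside the range the Joe-Kuo numbers are supposed to cover.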

Still, that number of paths (2^13 = 8192) is not that high compared to what I usually use. It's an interesting aspect of their paper: the calibration with a low number of paths.
